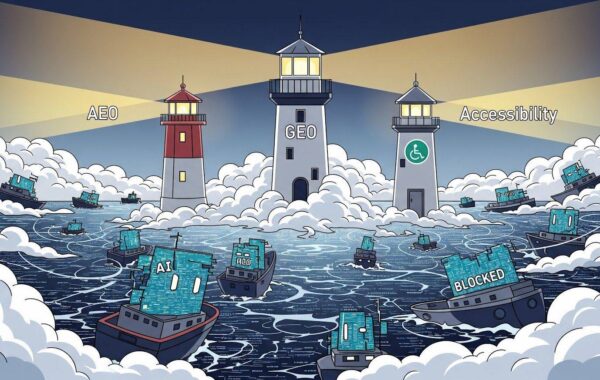

When managing a website, most of us are familiar with the robots.txt file. It’s a small but powerful tool that tells search engines and crawlers which parts of a site they should (or shouldn’t) explore, often including a sitemap to highlight what’s most important.

But what if we took that same idea and applied it to Web Governance?

That’s where the concept of ‘govern.txt’ comes in – a proposed framework for making governance metadata as accessible as technical metadata. Instead of hiding in spreadsheets or scattered notes, essential governance details could live right alongside the website itself.

What could it cover?

The possibilities are broad, but some examples include:

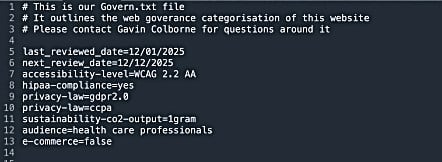

- Accessibility: Which WCAG version (2.1, 2.2) and conformance level (A, AA, AAA) does this site aim for? When was the site last tested with a screen reader?

- Privacy & Security: Which privacy laws and regulations apply (GDPR, CCPA, HIPAA, etc.)? What security standards are relevant?

- Ownership & Review: Who owns this website? When was it last reviewed? When is the next review scheduled?

- Sustainability: What is the site’s target CO₂ output per page load?

- Purpose & Audience: Is it an e-commerce site, an information hub, or a delivery service? Is it designed for a specific audience such as healthcare professionals, artists, or frontline workers?

- Clear Policies: Links or references to key policies (terms of use, cookies, modern slavery, accessibility, etc.) so users don’t have to hunt for them.

All of this could be stored in a simple text file, structured but human-readable so that crawlers, automated audits, and even future AI tools could instantly understand the governance framework behind a site.
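As a rough sketch of what such a file might look like and how a tool could read it: the field names below (`Owner`, `WCAG-Level`, `Privacy-Law` and so on) and the `Field: value` layout are assumptions made purely for illustration, loosely modelled on robots.txt. No govern.txt format has been agreed.

```python
# Hypothetical govern.txt content. The field names and values are
# illustrative assumptions, not part of any agreed standard.
SAMPLE = """\
# govern.txt (illustrative only)
Owner: web-team@example.com
Last-Reviewed: 2025-01-15
Next-Review: 2025-07-15
WCAG-Version: 2.2
WCAG-Level: AA
Privacy-Law: GDPR
Privacy-Law: CCPA
Policy: https://example.com/accessibility
Policy: https://example.com/cookies
"""


def parse_govern_txt(text: str) -> dict[str, list[str]]:
    """Parse 'Field: value' lines into a dict of field name -> list of values.

    Field names are lower-cased; repeated fields accumulate in order.
    Blank lines and lines starting with '#' are ignored.
    """
    fields: dict[str, list[str]] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        # Split on the first colon only, so URLs in the value stay intact.
        key, sep, value = line.partition(":")
        if not sep:
            continue  # not a 'Field: value' line; skip it
        fields.setdefault(key.strip().lower(), []).append(value.strip())
    return fields


if __name__ == "__main__":
    for name, values in parse_govern_txt(SAMPLE).items():
        print(name, "=", values)
```

Allowing a field to repeat (as with `Privacy-Law` and `Policy` here) keeps the file flat and easy to edit by hand, which is the same trade-off robots.txt makes.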

Automated website crawlers like Little Forest, Screaming Frog, Siteimprove and Google could use this information to alert site owners when a site is not compliant and to flag the pages that don’t meet its stated requirements.
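To make that concrete, here is a minimal sketch of the kind of check an audit tool might run against a site's declared governance metadata. The field names (`owner`, `next-review`, `wcag-level`) and the choice of which fields are required are hypothetical; a real tool would apply whatever rules the organisation defines.

```python
from datetime import date

# Assumed set of mandatory fields; purely illustrative.
REQUIRED_FIELDS = ("owner", "next-review", "wcag-level")


def audit(declared: dict[str, str], today: date) -> list[str]:
    """Return human-readable findings for one site's declared metadata."""
    findings: list[str] = []

    # Flag any required field that is absent or empty.
    for name in REQUIRED_FIELDS:
        if not declared.get(name):
            findings.append(f"missing field: {name}")

    # Flag a review date that cannot be read or has already passed.
    next_review = declared.get("next-review")
    if next_review:
        try:
            due = date.fromisoformat(next_review)
        except ValueError:
            findings.append(f"unparseable next-review date: {next_review}")
        else:
            if due < today:
                findings.append(f"review overdue since {due.isoformat()}")

    return findings


if __name__ == "__main__":
    site = {"owner": "web-team@example.com", "next-review": "2024-01-01"}
    for finding in audit(site, today=date(2024, 6, 1)):
        print(finding)
```

Passing `today` in explicitly, rather than reading the clock inside the function, keeps the check repeatable across a whole estate of sites.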

Why does it matter?

For organisations running hundreds of websites, tracking governance through spreadsheets quickly becomes messy and inefficient. By embedding this information directly with the site, you create a single source of truth that is easy to scan, easy to update, and consistent across the whole web estate.

What robots.txt did for search engines, govern.txt could do for governance: provide a universal, lightweight way of communicating standards and responsibilities.

This idea is still very much at the brainstorming stage, and we would love to hear your thoughts.

- What information would you want to see included in a govern.txt file?

- How could it make your life easier when managing multiple sites?

We have opened up the conversation on LinkedIn so you can drop your comments below!